Manual Terraform Reviews Don't Scale. Here's What Does.

Let's lay out the scenario:

- Your team just hit 50+ deployments per week.

- Suddenly, your senior engineers are spending 60% of their time being human Terraform interpreters instead of building new systems.

- Something's broken.

The Math Problem Nobody Talks About

Let's be honest about what manual Terraform reviews look like, even with traditional tools.

The reality:

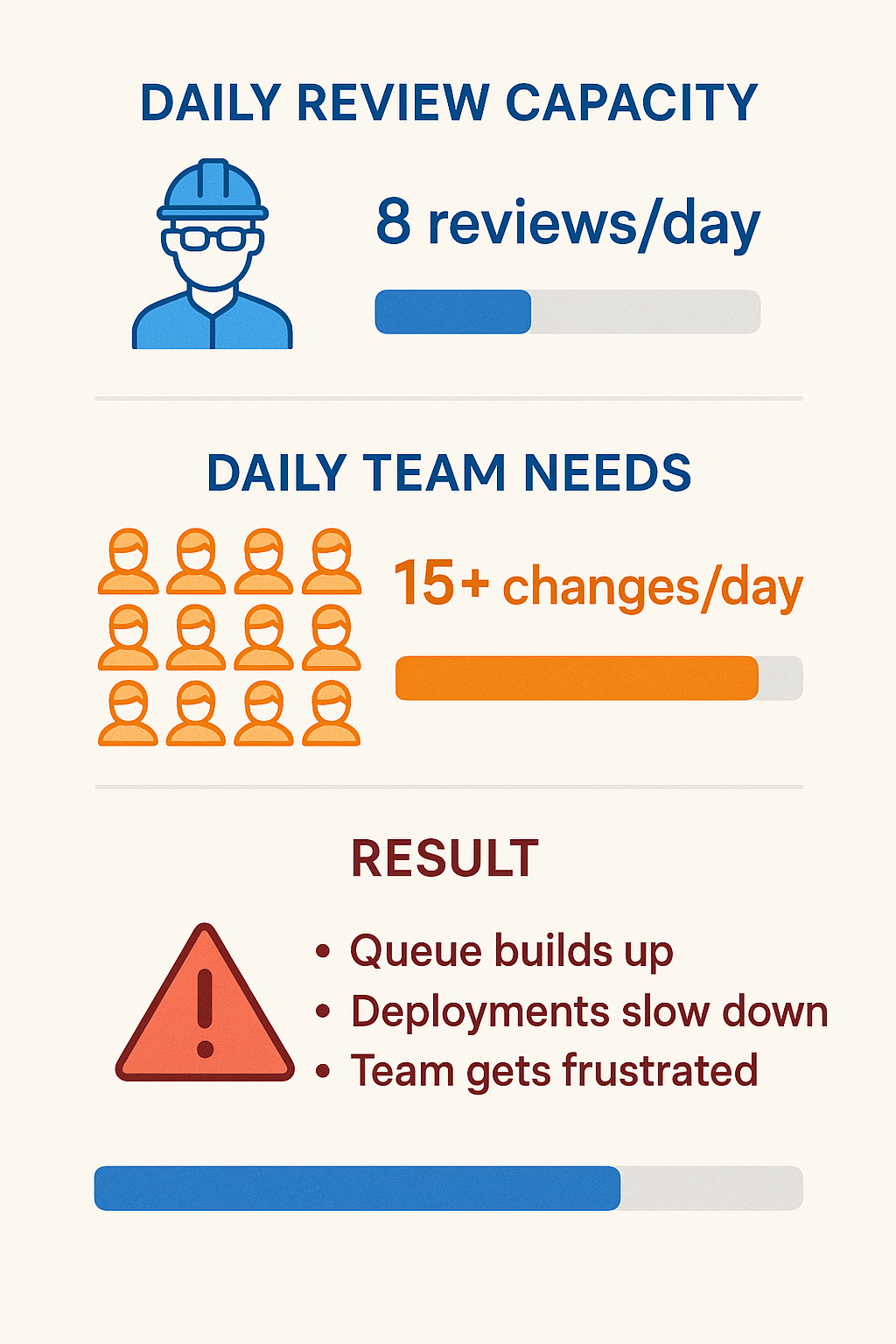

→ One senior engineer can thoroughly review ~8 complex changes per day

→ Your team needs to ship 15+ changes per day

→ Queue builds up, deployments slow down, and everyone gets frustrated
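To see why the queue only grows, here's a minimal Python sketch of the arithmetic. It assumes constant daily rates and that anything not reviewed today simply carries over to tomorrow; the ~8 reviews per reviewer and 15 changes per day are the figures above, and the function names are hypothetical.

```python
import math


def backlog_after(days: int, changes_per_day: float, reviewers: int,
                  reviews_per_reviewer: float = 8) -> float:
    """Changes still waiting for review after `days` (hypothetical helper).

    Assumes a constant arrival rate and a constant review capacity, with
    anything not reviewed today carried over to tomorrow.
    """
    capacity = reviewers * reviews_per_reviewer
    backlog = 0.0
    for _ in range(days):
        # New changes arrive, then reviewers clear as many as capacity allows.
        backlog = max(0.0, backlog + changes_per_day - capacity)
    return backlog


def reviewers_needed(changes_per_day: float, reviews_per_reviewer: float = 8) -> int:
    """Smallest number of full-time reviewers that keeps the queue from growing."""
    return math.ceil(changes_per_day / reviews_per_reviewer)


print(backlog_after(days=5, changes_per_day=15, reviewers=1))  # 35.0
print(reviewers_needed(15))  # 2
```

With one reviewer, the backlog grows by 7 changes every working day. Breaking even takes two senior engineers doing nothing but review, which is how teams end up with "human Terraform interpreters".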

The shortcuts teams take:

→ "LGTM" ("looks good to me") approvals without thorough analysis.

→ Junior engineers are often hesitant to work on infrastructure.

→ Critical changes get rushed through without proper review.

→ That sinking feeling every time someone runs 'terraform apply'.

The bottleneck isn't your tools. It's your process.

We're Still Doing Infrastructure Reviews Like It's 2015

Here's the thing:

We review Terraform plans the same way we review application code, but infrastructure isn't just code.

Infrastructure has:

→ Real-time dependencies that span your entire AWS account

→ Cost implications that aren't visible in the plan

→ Blast radius considerations that require live context

→ State drift possibilities that plans don't show

→ Cross-service impacts that no human can mentally calculate

A senior engineer can spot obvious mistakes (missing quotes, typos in resource names, basic syntax errors), but they can't mentally process the dependency graph of your entire infrastructure stack. They can't predict which services depend on a given IAM policy.

They definitely can't tell you that changing an instance type will trigger a 5-minute downtime window for three downstream services. Yet that's precisely what we ask them to do dozens of times per day.

The Review Theater Problem

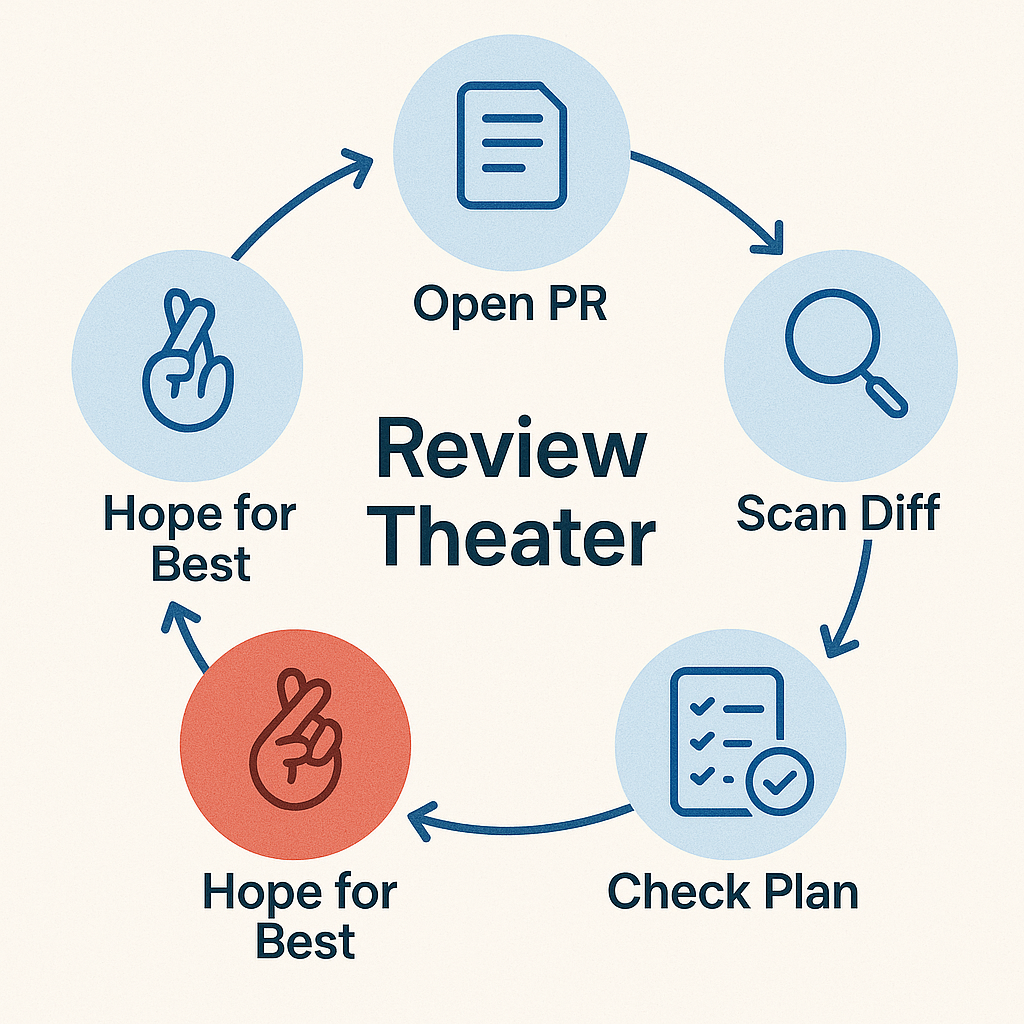

Most infrastructure reviews follow this pattern:

Step 1: An engineer opens a PR with 200+ lines of Terraform changes

Step 2: Reviewer scrolls through the diff, looking for anything dangerous

Step 3: Reviewer checks if the plan seems reasonable (whatever that means)

Step 4: Reviewer approves because they don't want to block progress

Step 5: Everyone hopes for the best

This isn't rigorous engineering.

This is Review Theater.

The scariest part is that the reviewer often has less context than the person who wrote the code. They're reviewing changes to modules they didn't write, in environments they don't fully understand, based on incomplete information.

What Manual Reviews Miss (Every Single Day)

Here are real examples of issues that slip through manual reviews:

State Drift

Your plan looks clean, but that RDS instance was manually scaled up last week to handle a traffic spike. Your "minor update" will scale it back down and cause an outage.
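Terraform itself surfaces some of this. In recent versions, the JSON form of a saved plan (`terraform plan -out=tfplan`, then `terraform show -json tfplan`) carries a `resource_drift` list of changes detected outside Terraform next to the usual `resource_changes`. The sketch below assumes that format, and assumes a drift entry's `before` is the last recorded state and `after` is what Terraform found on refresh. It flags drifted resources that the plan is about to modify again. The helper names are hypothetical, and it only compares top-level attributes.

```python
import json


def changed_attributes(change: dict) -> dict:
    """Top-level attributes whose value differs between `before` and `after`."""
    before = change.get("before") or {}
    after = change.get("after") or {}
    return {
        key: (before.get(key), after.get(key))
        for key in sorted(set(before) | set(after))
        if before.get(key) != after.get(key)
    }


def drift_the_plan_will_touch(plan: dict) -> dict:
    """Map each drifted resource that this plan also changes to its drifted attributes.

    `resource_drift` records what changed outside Terraform; `resource_changes`
    records what this plan will do. An overlap means the apply may quietly
    revert someone's manual fix.
    """
    touched = {
        rc["address"]
        for rc in plan.get("resource_changes", [])
        if rc["change"]["actions"] != ["no-op"]
    }
    return {
        rd["address"]: changed_attributes(rd["change"])
        for rd in plan.get("resource_drift", [])
        if rd["address"] in touched
    }


def load_plan(path: str) -> dict:
    """Read the output of `terraform show -json tfplan` that was saved to a file."""
    with open(path) as f:
        return json.load(f)
```

Call it as `drift_the_plan_will_touch(load_plan("plan.json"))`. An empty result means no overlap was found, not that nothing has drifted.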

Hidden Dependencies

You're updating an IAM policy. The reviewer doesn't realize that six different services use this policy across three teams. Your change breaks all of them.
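Within a single Terraform configuration, you can at least find a resource's direct consumers mechanically. The `configuration` section of the JSON plan lists, for each resource, the `references` its expressions make. This hypothetical helper walks the root module and returns every resource that points at a given address. It's only a sketch: it ignores child modules, and it can't see consumers living in other repositories or state files, which is exactly the cross-team case described here.

```python
def collect_references(expr) -> set:
    """Recursively gather every `references` entry in a JSON-plan expression tree."""
    refs = set()
    if isinstance(expr, dict):
        refs.update(expr.get("references", []))
        for value in expr.values():
            refs |= collect_references(value)
    elif isinstance(expr, list):
        for item in expr:
            refs |= collect_references(item)
    return refs


def direct_dependents(plan: dict, target: str) -> list:
    """Root-module resources whose configuration references `target` (hypothetical helper)."""
    root = plan.get("configuration", {}).get("root_module", {})
    hits = []
    for resource in root.get("resources", []):
        refs = collect_references(resource.get("expressions", {}))
        # A reference may name the resource itself or one of its attributes (e.g. `.arn`).
        if any(ref == target or ref.startswith(target + ".") for ref in refs):
            hits.append(resource["address"])
    return sorted(hits)
```

Running `direct_dependents(plan, "aws_iam_policy.shared")` on a loaded plan lists the attachments, roles, and other resources in this configuration that would feel a change to that policy.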

Resource Recreation

You change a single tag. It looks harmless. But due to a provider quirk, this triggers a destroy-and-recreate of a stateful resource holding 3TB of data.
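The JSON plan does record replacements, even when the diff that caused them looks trivial. A replaced resource appears in `resource_changes` with `actions` of `["delete", "create"]` (or `["create", "delete"]` under `create_before_destroy`), and recent Terraform versions add `replace_paths` naming the attributes that forced it. The sketch below surfaces those and marks types from a hypothetical list of stateful resources that deserve a second look.

```python
# Hypothetical list: resource types whose replacement can lose data. Extend for your stack.
STATEFUL_TYPES = {"aws_db_instance", "aws_rds_cluster", "aws_ebs_volume", "aws_efs_file_system"}

# Destroy-then-create (default) and create-then-destroy (create_before_destroy).
REPLACE_ACTIONS = (["delete", "create"], ["create", "delete"])


def replacements(plan: dict) -> list:
    """Return (address, is_stateful, replace_paths) for every planned replacement."""
    found = []
    for rc in plan.get("resource_changes", []):
        change = rc.get("change", {})
        if change.get("actions") in REPLACE_ACTIONS:
            found.append((
                rc["address"],
                rc.get("type") in STATEFUL_TYPES,
                # Attribute paths that forced the replacement, when Terraform reports them.
                change.get("replace_paths", []),
            ))
    return found
```

A non-empty result with `is_stateful` set is a good reason to block the merge until a human has read `replace_paths`.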

Cost Implications

You're provisioning what appears to be a standard setup. The reviewer doesn't notice that you're using the wrong instance family in the wrong region, turning an expected $200/month resource into a $3,000/month surprise.
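The arithmetic behind that surprise is simple; the hard part is knowing the right hourly rate for a given family and region. Here's a minimal sketch, assuming you already have an hourly price from somewhere and using the common 730-hours-per-month convention. The rates in the example are made up for illustration, not real AWS prices, and the helper names are hypothetical.

```python
HOURS_PER_MONTH = 730  # 24 * 365 / 12, the usual monthly-cost convention


def monthly_cost(hourly_rate: float, count: int = 1) -> float:
    """Projected monthly cost of `count` always-on resources at `hourly_rate` USD/hour."""
    return hourly_rate * HOURS_PER_MONTH * count


def exceeds_budget(hourly_rate: float, count: int, expected_monthly: float,
                   tolerance: float = 1.5) -> bool:
    """Flag a change whose projected cost is more than `tolerance` times what was expected."""
    return monthly_cost(hourly_rate, count) > expected_monthly * tolerance


# Hypothetical rates: what the author expected vs. what the PR actually provisions.
print(round(monthly_cost(0.27)))                      # 197
print(exceeds_budget(4.10, 1, expected_monthly=200))  # True
```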

Blast Radius

You're updating networking rules. The change looks isolated, but it affects shared infrastructure that 12 applications depend on.
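Blast radius is a graph question: start from the changed resource and keep following "depends on me" edges until nothing new turns up. Here's a breadth-first sketch over a hypothetical reverse-dependency map (each key lists the things that depend on it). In practice you'd have to assemble that map from plan references, service catalogs, or network metadata; the names below are illustrative.

```python
from collections import deque


def blast_radius(dependents: dict, changed: str) -> set:
    """Everything that depends on `changed`, directly or transitively (BFS)."""
    affected = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for dep in dependents.get(node, ()):
            # Skip the starting node and anything already seen, so cycles terminate.
            if dep != changed and dep not in affected:
                affected.add(dep)
                queue.append(dep)
    return affected


# Hypothetical map: each key lists the things that depend on it.
dependents = {
    "aws_security_group.shared": ["aws_lb.internal", "app.billing"],
    "aws_lb.internal": ["app.checkout", "app.search"],
    "app.search": ["aws_security_group.shared"],  # a cycle, handled by the visited check
}

print(sorted(blast_radius(dependents, "aws_security_group.shared")))
# ['app.billing', 'app.checkout', 'app.search', 'aws_lb.internal']
```

The point of the transitive walk is that `app.checkout` never references the security group directly; it only shows up because the internal load balancer does.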

These aren't edge cases.

They're just another Tuesday afternoon.

The Cognitive Load Problem

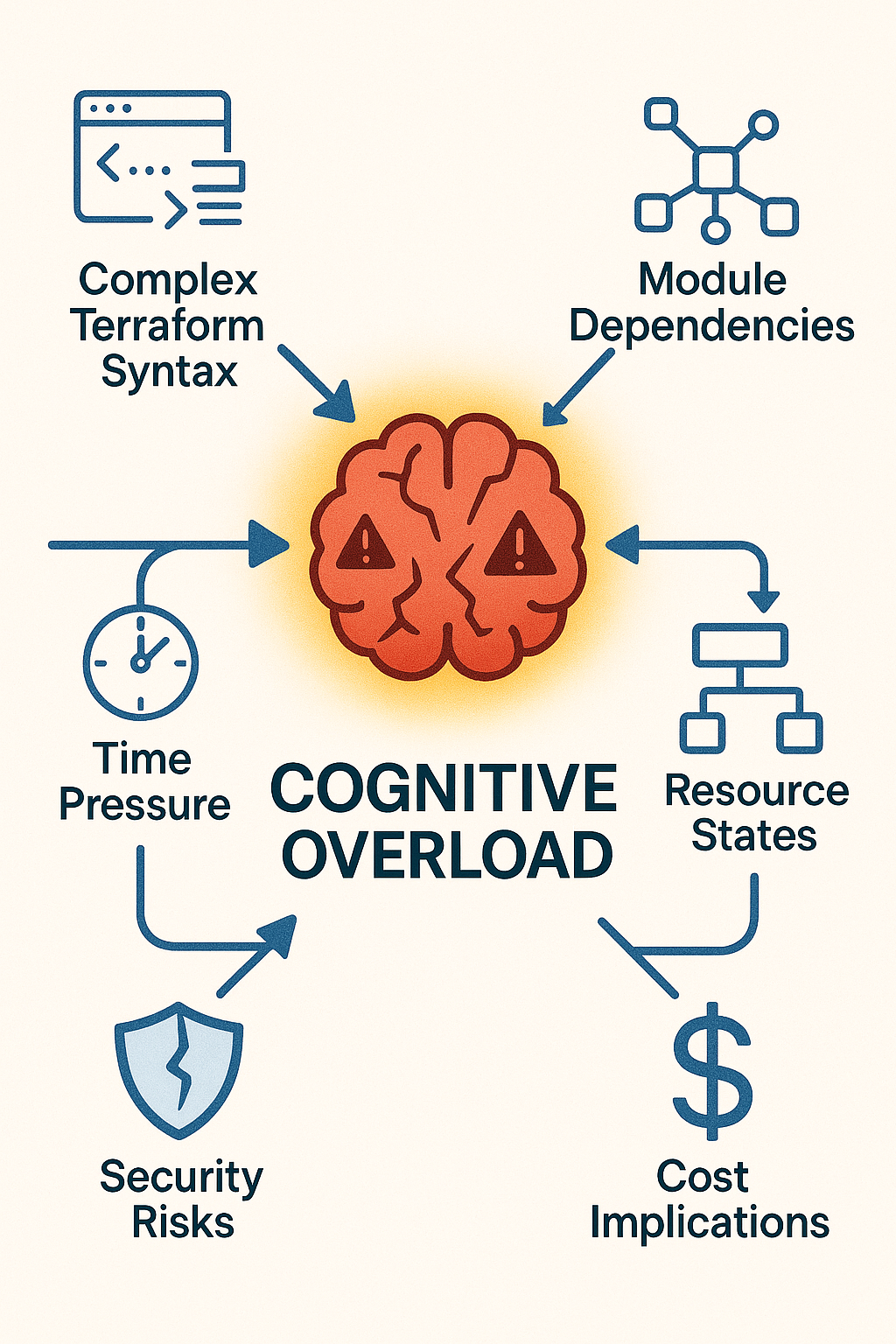

Think about what we're asking reviewers to do:

→ Parse complex Terraform syntax and modules they didn't write

→ Mentally map code changes to real infrastructure impact

→ Remember the state of dozens of interconnected resources

→ Predict failure modes across multiple cloud services

→ Calculate cost and performance implications

→ Do this consistently, under time pressure, multiple times per day

No human can do this reliably; even the most skilled engineers occasionally miss things. Companies need to recognize that this isn't a skills problem but a workflow and process problem.

AI-Native Workflows: The Solution That Works

The future isn't humans reviewing Terraform faster. It's AI-native workflows that provide the context humans need to make confident decisions.

Instead of asking, "Can a human understand this plan faster?", ask, "Can AI provide better context about what this change means?"

Purpose-built AI that:

→ Understands your live infrastructure state, not just your code

→ Calculates real blast radius by analyzing actual dependencies

→ Explains changes in plain English for any team member

→ Catches the subtle state and configuration issues humans miss

→ Provides cost and performance predictions based on real usage patterns

This isn't about replacing engineers. It's about giving every team member, from junior developer to principal engineer, the contextual feedback they need to make confident infrastructure decisions and move the company closer to its strategic business goals.

What AI-Native Reviews Look Like

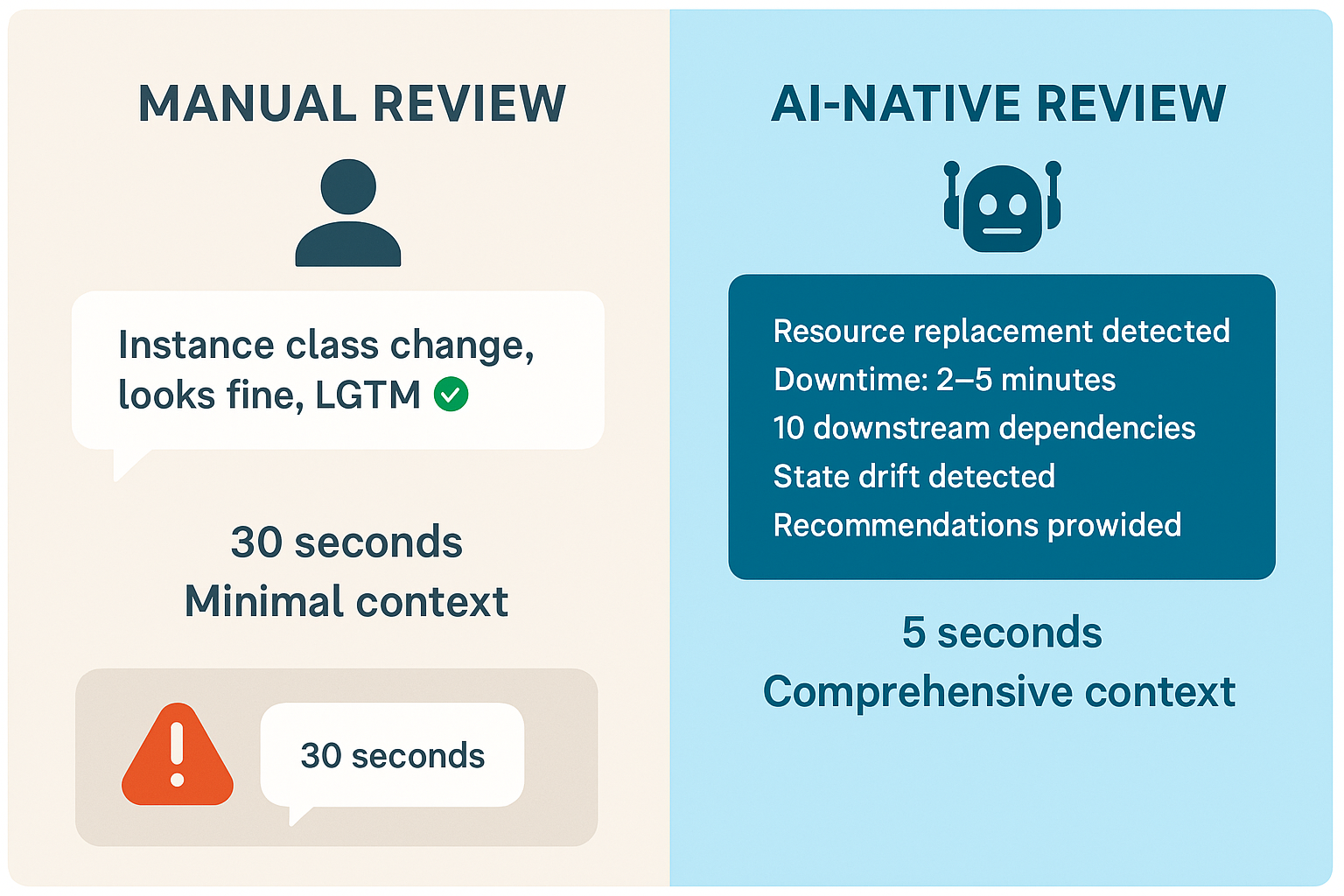

Let's say someone opens a PR changing an RDS instance class:

instance_class = "db.t3.medium" → "db.m5.large"

Manual review thinking:

"Instance class change looks fine, LGTM."

AI-native review analysis:

"This change modifies prod-db in place, and resizing the instance class takes the database offline briefly. Estimated downtime: 2-5 minutes. This instance has 10 downstream dependencies, including the payment service and user authentication. The live instance has also drifted from its recorded state (someone manually enabled backup encryption). Consider leaving apply_immediately = false so the change waits for the next maintenance window, or scheduling it for a low-traffic period."

One gives you approval, and the other gives you confidence.
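The deterministic half of that analysis can be read straight from the JSON plan (`terraform show -json`). Here's a small, hypothetical `describe` helper that turns one `resource_changes` entry into a plain-English line, including before-and-after values for in-place updates. Note that the AWS provider normally plans an `instance_class` change on `aws_db_instance` as an in-place update rather than a replacement; the dependency, drift, and downtime context in the review above still has to come from live infrastructure, not from the plan alone.

```python
ACTION_PHRASES = {
    ("no-op",): "is unchanged",
    ("read",): "will be read",
    ("create",): "will be created",
    ("update",): "will be updated in place",
    ("delete",): "will be destroyed",
    ("delete", "create"): "will be destroyed and then re-created",
    ("create", "delete"): "will be re-created (new copy first, then the old one is destroyed)",
}


def describe(resource_change: dict) -> str:
    """One plain-English line for a `resource_changes` entry from `terraform show -json`."""
    change = resource_change["change"]
    actions = tuple(change["actions"])
    line = f"{resource_change['address']} {ACTION_PHRASES.get(actions, '/'.join(actions))}"
    if actions == ("update",):
        before = change.get("before") or {}
        # Values only known after apply are missing from `after` and print as None.
        after = change.get("after") or {}
        diffs = [
            f"{key}: {before.get(key)!r} -> {after.get(key)!r}"
            for key in sorted(set(before) | set(after))
            if before.get(key) != after.get(key)
        ]
        if diffs:
            line += " (" + ", ".join(diffs) + ")"
    return line


print(describe({
    "address": "aws_db_instance.prod_db",
    "change": {
        "actions": ["update"],
        "before": {"instance_class": "db.t3.medium", "engine": "postgres"},
        "after": {"instance_class": "db.m5.large", "engine": "postgres"},
    },
}))
# aws_db_instance.prod_db will be updated in place (instance_class: 'db.t3.medium' -> 'db.m5.large')
```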

Beyond Code: Understanding Infrastructure Intent

Traditional code review tools show you what's changing in your Terraform files, while AI-native IaC workflows understand what's changing in your infrastructure before the changes are even deployed.

Terracotta AI can tell you:

→ What this change does in business terms

→ Which teams and services will be affected by the change

→ How much it will cost based on your usage patterns

→ What risks are being taken and how to mitigate them

→ Whether this change has been tried before, and what happened

This is the difference between reviewing syntax and reviewing intent. The combination of both is a game changer in the world of Infrastructure as Code deployments.

The Path Forward

Manual Terraform reviews don't scale because reliably interpreting Terraform plans at this volume is beyond what any human can do. The solution isn't teaching humans to be better at parsing HCL syntax. It's building workflows that bridge the gap between code and infrastructure reality.

AI-native Infrastructure as Code workflows give teams the following:

→ Speed - No more waiting days for someone to decipher a complex plan

→ Confidence - Context about what changes mean and what could go wrong

→ Education - Junior engineers learn from AI explanations instead of guessing

→ Reliability - Consistent analysis that doesn't depend on who's available to review

The future of Infrastructure as Code (IaC) isn't faster manual reviews but intelligence-augmented IaC workflows that scale with the complexity of your team and your infrastructure.

At Terracotta AI, we're building exactly this: a purpose-built IaC AI that analyzes and optimizes Terraform pull requests in the context of your code, local planned state, remote state, and live running resources.

Ready to see what AI-native Infrastructure as Code reviews look like?
