Real-Time Natural Language Guardrails for Terraform Modules: Because Every Company Customizes Them and Platform Teams Absorb the Pain

How Terracotta AI turns module intent into enforceable guardrails, with a real PR example

If you have ever owned Terraform modules in a real engineering organization, you already know something that most companies will not say publicly. The problem is not Terraform. The problem is not even infrastructure complexity. The problem is that once custom modules leave the platform team and fall into the hands of dozens or hundreds of developers with varying levels of context, experience, and priorities, they can become fragmented.

Developers treat modules as Legos. Platform teams treat them as safety systems.

This gap becomes a source of friction, misuse, repetitive Slack questions, late-night incident reviews, and a maintenance burden that grows every quarter.

This article digs into the hidden challenges behind module ownership, explains why the friction grows over time, and shows how Terracotta AI lets platform teams enforce best practices and intent automatically in pull requests. The NAT Gateway example below walks through a real guardrail and a real PR violation, with screenshots to anchor the experience.

The Hidden Maintenance Burden of Custom Modules (Full Breakdown)

Platform teams build modules to achieve standardization, safety, and speed. But as organizations scale and new services emerge, those modules slowly become the foundation everyone relies on, even though few people understand why they exist or how they are meant to be used. Developers tend to treat modules as configurations rather than systems. With enough drift and enough time, the module becomes a black box that only the platform team can truly understand, maintain, and enforce.

Let's break down the core pressures that create this mess.

1. Developers treat variables as suggestions, not contracts

Platform teams, or any team creating custom modules, carefully choose defaults, guard some settings behind variables, and structure modules to create a predictable infrastructure. Developers see a variable and assume it is safe to override. They often do not understand the cost, redundancy, or compliance assumptions baked into each choice.

A classic example is the NAT Gateway setting.

Production environments expect high availability.

Developers are told to find "cost savings" wherever possible.

One variable can swing both.
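To make that concrete, here is a minimal sketch of how a single boolean can decide the NAT topology inside a module. The resource layout and the `public_subnet_ids` input are assumptions for illustration, not the actual module discussed in this article.

```hcl
# Sketch only: names other than single_nat_gateway are illustrative assumptions.
variable "single_nat_gateway" {
  description = "Use single NAT gateway to reduce costs (suitable for dev or staging)"
  type        = bool
  default     = true
}

variable "public_subnet_ids" {
  description = "One public subnet ID per availability zone (hypothetical input)"
  type        = list(string)
}

locals {
  # true  -> one NAT gateway shared by every AZ (cheaper, single point of failure)
  # false -> one NAT gateway per public subnet (redundant, higher cost)
  nat_gateway_count = var.single_nat_gateway ? 1 : length(var.public_subnet_ids)
}

resource "aws_eip" "nat" {
  count  = local.nat_gateway_count
  domain = "vpc" # AWS provider v5 or later
}

resource "aws_nat_gateway" "this" {
  count         = local.nat_gateway_count
  allocation_id = aws_eip.nat[count.index].id
  subnet_id     = var.public_subnet_ids[count.index]
}
```

Nothing in the code tells the developer that flipping this flag trades cost against availability. That context sits in the description string at best.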

2. Modules get forked into "one-off" versions that never return

Even if you have an internal registry, modules eventually get copied. A team forks yours with a minor tweak, then another team copies their version, then ops discovers three different NAT strategies across four environments.

The platform team always becomes the integration point for these divergent versions.
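As a rough illustration of what that divergence can look like in two repositories, consider the sketch below. The registry address, Git URL, and module labels are all hypothetical.

```hcl
# Team A: consumes the platform module from an internal registry, pinned to a release.
module "team_a_network" {
  source  = "app.terraform.io/example-org/vpc/aws" # hypothetical registry address
  version = "1.2.0"

  environment        = "prod"
  single_nat_gateway = false
}

# Team B: consumes a fork through a Git source that tracks a branch.
# Fixes and defaults from the platform module never reach this copy.
module "team_b_network" {
  source = "git::https://example.com/team-b/terraform-vpc-fork.git?ref=main" # hypothetical fork

  environment        = "prod"
  single_nat_gateway = true
}
```

Both blocks are valid Terraform, and neither one records that the second has drifted away from what the platform team intended for production.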

3. A Terraform module does not communicate intent on its own

Terraform modules describe configuration possibilities. They do not explain why something is a default, when a variable should never be touched, or what downstream systems depend on a specific value.

This turns into:

- Misaligned assumptions

- Incorrect overrides

- Silent breaking changes

- Differences between the staging and production modules that no one notices until the outage

Intent lives in platform engineers' heads, not in code.

4. Standards are documented, not enforced

Every platform has a document or catalog entry that describes how modules should be used. Almost no one reads it, and those who do follow up with Slack questions. New hires do not even know it exists. Teams use modules inconsistently, and the platform team manually corrects mistakes during PR review. Documentation without enforcement becomes a historical artifact rather than a control mechanism.

5. Manual PR review becomes the safety net for the entire company

Every variable override, every version bump, every weird module instantiation eventually lands in a PR that you are expected to review. You are the stopgap that protects production from misuse.

This is not sustainable at scale.

Why This Problem Gets Worse as Companies Grow

Customization is not malicious. It is a symptom of scale.

As you grow:

- more microservices

- more developers with varied experience

- more environments

- more compliance requirements

- more module versions

- more undocumented edge cases

- more time pressure

The platform team becomes a bottleneck because the system depends on you to interpret intent for every change. The wider the engineering org becomes and the more democratized Terraform becomes through self-service implementation, the harder it is to maintain consistent infrastructure behavior across teams.

This is the exact moment when module governance turns from a best practice into a constant firefight.

The Missing Piece: Expressing Module Intent Directly in PRs

The core issue is simple.

Modules encode configuration, not intent.

The documentation explains the intent, but no one reads it.

PR reviews enforce intent, but reviews do not scale.

Terracotta AI bridges all three by allowing platform teams to define intent in natural-language Policy as Code (i.e., Guardrails) and automatically enforce it in every pull request that touches Terraform or CDKTF.

The platform team writes guardrails like:

If the environment variable is set to prod, make sure the "single_nat_gateway" is set to false. Setting this to false allows multiple NAT gateways, making production NAT instances highly available and redundant.

Terracotta AI interprets this rule and enforces it proactively in PRs, before your CI triggers and runs steps like Validate, Plan, or Apply.

The intent is preserved, enforcement is automatic, and the context is visible to the developer AND the platform engineering team in real time.

This is the workflow platform teams have tried to build manually for years.
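For comparison, a hand-built version of the same check might look like the sketch below. It assumes Terraform 1.9 or later, where a validation condition can reference other variables, and it assumes an `environment` input whose name and type are inferred from the guardrail text rather than shown in the module.

```hcl
# Sketch of a manual, in-module equivalent of the guardrail (assumes Terraform >= 1.9).
variable "environment" {
  description = "Deployment environment, for example dev, staging, or prod (assumed input)"
  type        = string
}

variable "single_nat_gateway" {
  description = "Use single NAT gateway to reduce costs (suitable for dev or staging)"
  type        = bool
  default     = true

  validation {
    # Fails only when prod is combined with a single NAT gateway.
    condition     = !(var.environment == "prod" && var.single_nat_gateway)
    error_message = "Production requires single_nat_gateway = false so NAT is redundant across availability zones."
  }
}
```

A check like this only protects teams that use the unmodified module. A fork can delete it, and the error message is all the context a developer gets.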

The Guardrail Example: NAT Gateway Redundancy in Production

To illustrate the workflow, I'd like to walk through a REAL-WORLD example we use to deploy our OWN infrastructure here at Terracotta AI.

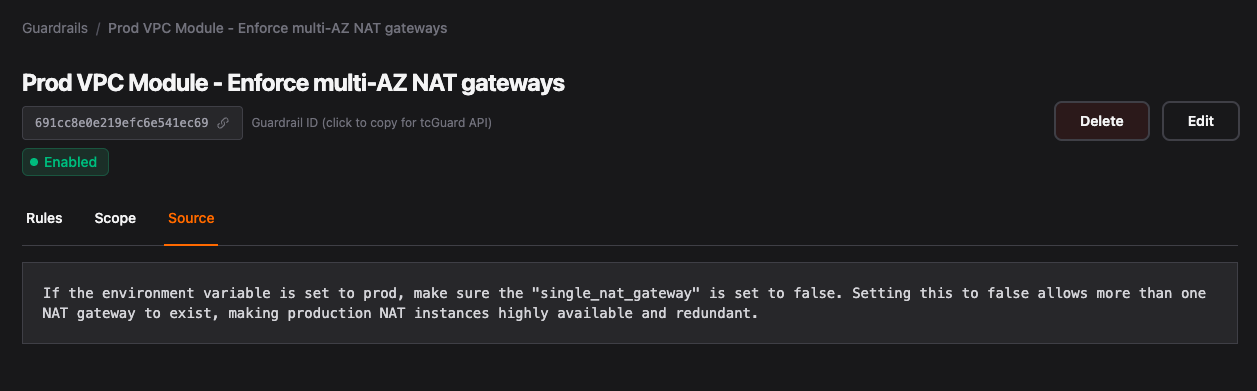

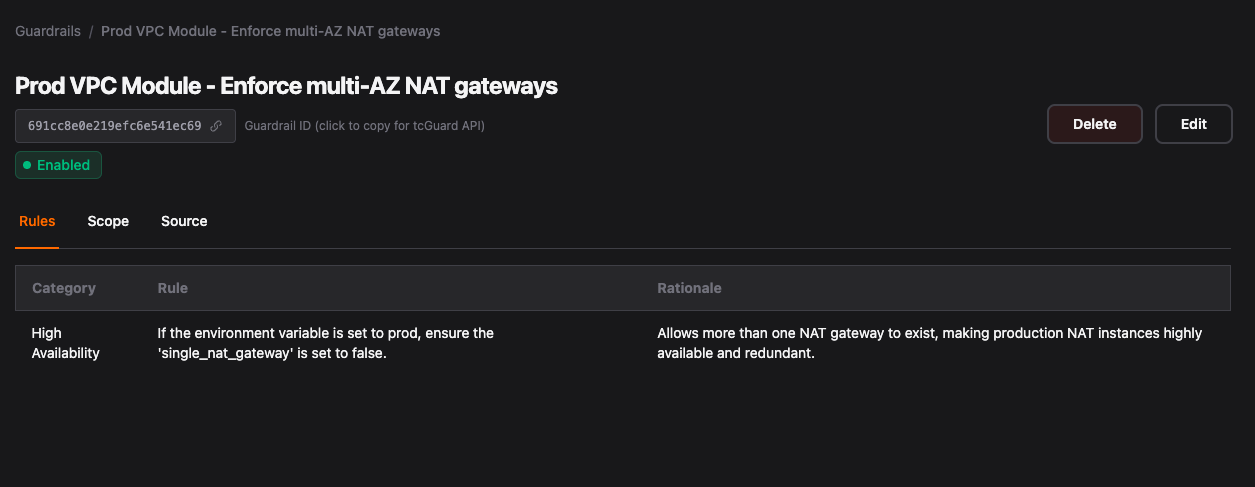

Step 1. You Write a Natural Language Policy in Terracotta

Your rule:

If the environment variable is set to prod, make sure the "single_nat_gateway" is set to false. Setting this to false allows multiple NAT gateways, making production NAT instances highly available and redundant.

This instantly becomes a structured guardrail that Terracotta AI can enforce.

No custom code.

No Terraform Cloud policy engine.

No Rego.

No maintenance overhead.

Step 2. You Open a PR That Violates the Guardrail

Your variable:

```hcl
variable "single_nat_gateway" {
  description = "Use single NAT gateway to reduce costs (suitable for dev or staging)"
  type        = bool
  default     = true
}
```

In a production context, this default creates a single point of failure. This is precisely the kind of oversight that leads to real outages.
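One way a PR can end up in this state is a production root module that never overrides the default. The sketch below assumes a hypothetical local module path and an `environment` input.

```hcl
# Hypothetical prod root module: the call looks harmless, but it inherits default = true.
module "network" {
  source = "./modules/network" # hypothetical path

  environment = "prod"
  # single_nat_gateway is not set, so the module default (true) applies
  # and production ends up with one NAT gateway.
}
```

The diff contains no mention of NAT at all, which is why this kind of change tends to slip through a manual review.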

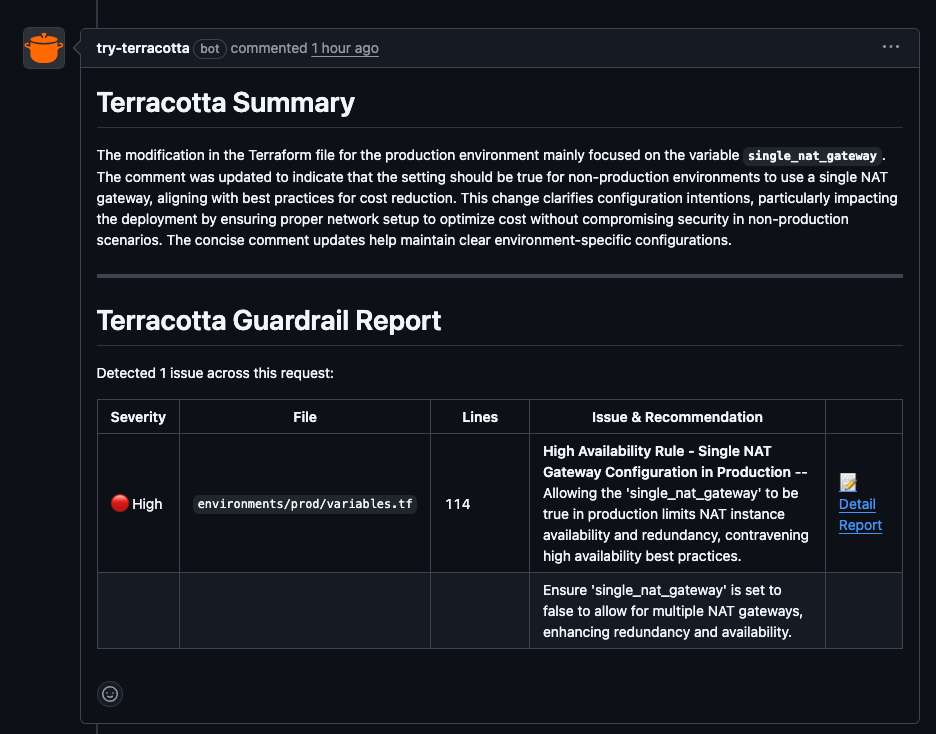

Step 3. Terracotta AI Immediately Flags the Violation Inside the PR

The PR shows:

- The guardrail name

- The reason it failed

- The risk involved

- Where to fix it

- What the correct value should be

No hunting through logs.

No Slack messages to the platform team.

No re-explaining the module.

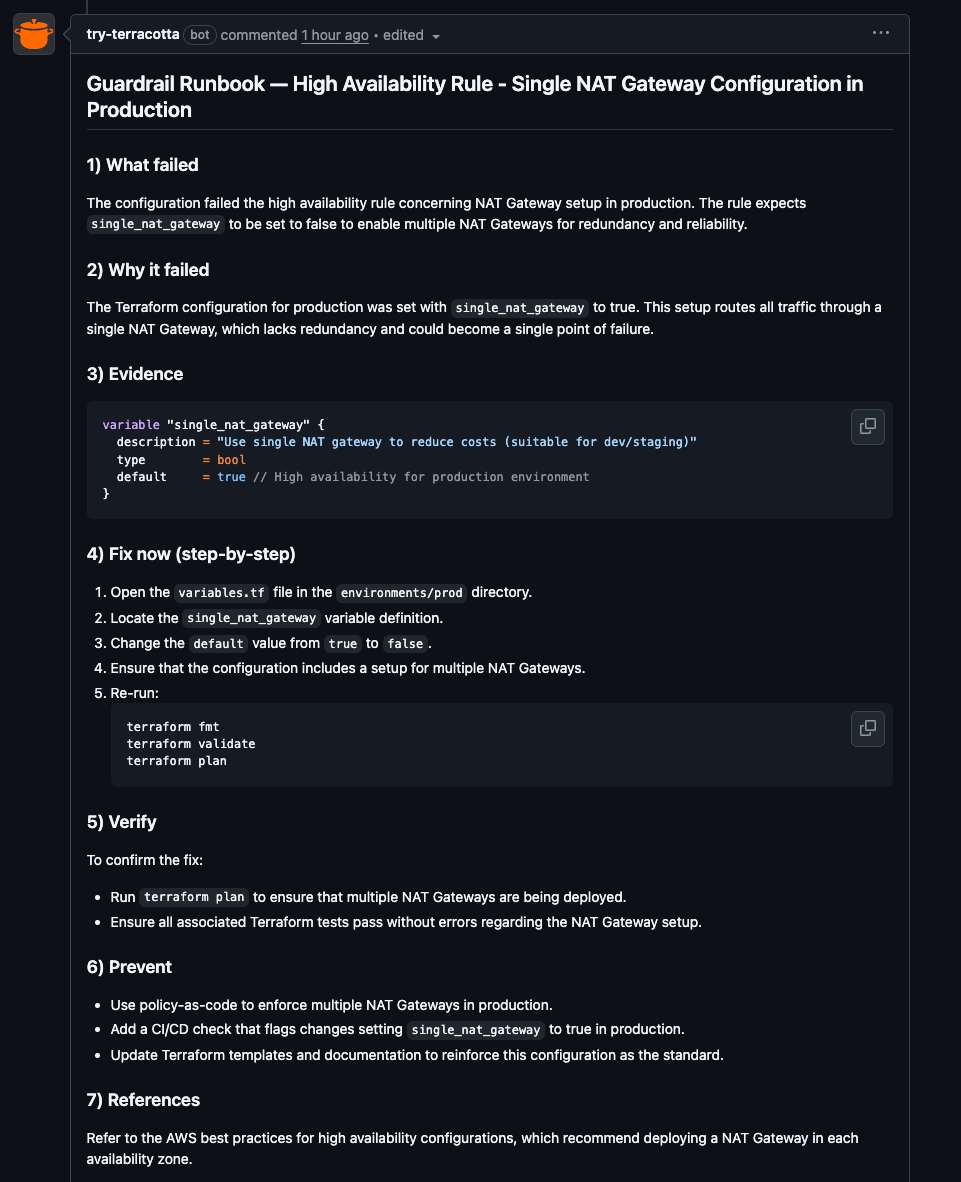

Step 4. The Detailed Report Creates a Runbook Automatically

This is the part developers love.

Terracotta explains:

- WHY the redundancy matters

- HOW the module is designed

- WHAT the correct setting is

- WHAT will break if it remains wrong

- HOW to apply the fix

This is effectively built-in documentation delivered when a developer needs it.
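For the NAT Gateway guardrail, the fix such a report points to is a one-line override. Here is a minimal sketch, assuming a hypothetical `./modules/network` module that accepts `environment` and `single_nat_gateway` inputs:

```hcl
# Hypothetical prod root module after the fix.
module "network" {
  source = "./modules/network" # hypothetical path

  environment        = "prod"
  single_nat_gateway = false # explicit override: allow multiple NAT gateways in prod
}
```

Setting the value explicitly in the production configuration, rather than relying on the module default, also keeps the intent visible to the next reviewer.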

What Platform Teams Gain

Reduction in repeated Slack questions

- Developers get answers inside the PR instead of asking the platform team.

Enforcement of module intent without babysitting

- Policies run automatically for every change.

Consistency across environments and teams

- No more "team A uses version 1.2 with overrides, team B uses version 1.1, and team C hardcoded prod settings into staging."

Confidence that standards are followed

- Guardrails enforce the rules you care about before the change reaches CI or Terraform Cloud.

A single place to define best practices

- Module behavior, safety rules, and compliance standards all live together.

Shared understanding

- Developers finally understand why things are designed a certain way.

What Developers Gain

Clear explanations

- They understand the reasoning behind module choices, not just the syntax.

Safer changes

- They know immediately if a setting will break production.

Faster reviews

- Platform teams are no longer the bottleneck.

More autonomy

- They can use self-service infrastructure responsibly.

What This Means for Module Governance Going Forward

- Your modules do not need rewriting.

- Your developers do not need training programs.

- Your PR reviewers do not need more time.

- Your workflows do not need an overhaul.

You simply need a way to take the intent behind your modules and enforce it consistently where developers work: inside pull requests.

Terracotta AI gives platform teams the missing enforcement layer for module governance. It turns module expertise into guardrails. It prevents misuse before deployment. It protects production without slowing development.

This is what Terraform governance should have always felt like.

Interested in a custom demo for you and your team? Head over to https://tryterracotta.com/schedule-demo and let's chat!

About Terracotta AI

Terracotta AI turns Terraform pull requests into intent-driven, natural-language summaries, solving the final frontier of friction in self-service IaC within engineering orgs: the Platform Engineering and Developer dilemma.

Terracotta AI is a Y Combinator-backed and seed-funded startup building deterministic, infra-aware AI for Platform, DevOps, and SRE teams.
